Forget GPT-4o's voice -- the real problem with AI is us

Image: HAI/Stanford U

It’s been a crazy busy few weeks for AI. Google has a new text-to-video tool, OpenAI released a new GPT model that can speak, and someone thinks it’s their voice. Multiple studies have found that AI is really good at understanding humans, a whole bunch of people who worked on AI safety left OpenAI, and I’ll tell you why I think we’re totally not taking this seriously enough.

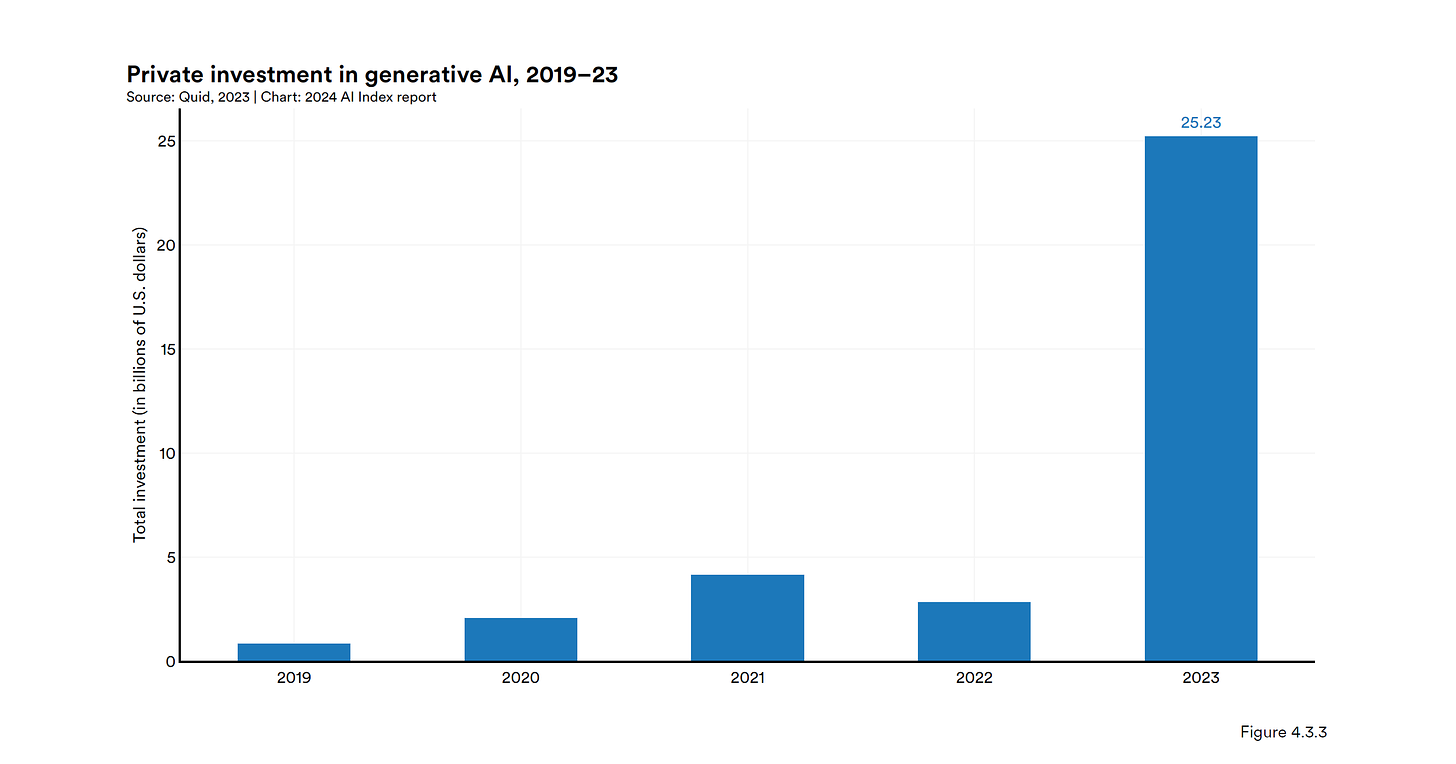

According to a recent report from Stanford University, both corporate and private investment in AI declined in 2023. But funding for generative AI models like ChatGPT increased by almost a factor of 8 from 2022, reaching more than 25 billion dollars in 2023. The vast majority of that money comes from the United States. The cost of training the largest models is also rising rapidly; note that the vertical axis is a log scale. OpenAI’s GPT-4 cost an estimated 78 million dollars just to train, and Google's Gemini Ultra cost a whopping 191 million.

But, hey, at least we’re getting something out of it, for example this crocheted elephant. It was produced by Google’s new text-to-video tool, which is called Veo and can create high-definition videos up to a minute long. Unfortunately, there isn’t even a waiting list for the EU yet, which is too bad. Meanwhile, here’s an image of a crocheted Einstein.

Then we have the launch of GPT-4o. The “o” stands for omni, from the Latin word for “all”, because the model can process text, audio, images, and video. OpenAI has put out some very neat demos.

Did this voice sound familiar to you? The actress Scarlett Johansson thinks it’s hers. It turns out that OpenAI made her an offer for usage rights a while ago, but she declined. She thinks it still sounds very much like her. But does it? I mean, listen to it yourself. In any case, Johansson hired a lawyer who sent letters, and OpenAI removed this voice.

Another odd thing that’s been going on at OpenAI is that multiple people left, and the superalignment team has been disbanded. This group of researchers was tasked with making sure that the goals and values of an artificial general intelligence would be aligned with those of humans, so, you know, to avoid human extinction. It seems like a good idea, but apparently the superalignment group didn’t feel like they were appreciated.

One of the people who left is Jan Leike, who was head of the superalignment team. Another is Ilya Sutskever, one of the co-founders of the organization, who had already raised concerns about the CEO, Sam Altman, last year. It doesn’t seem to have been a big falling-out, maybe more like an, hmm, alignment problem.

Subscribe to Science without the gobbledygook to keep reading this post and get 7 days of free access to the full post archives.